Takeaways from ServerlessNYC 2018

ServerlessNYC featured a diverse set of speakers talking about all things serverless. In this post I summarize the key takeaways and lessons learned.

I had the opportunity to attend ServerlessNYC this week (a ServerlessDays community conference) and had an absolutely amazing time. The conference was really well-organized (thanks Iguazio), the speakers were great, and I was able to have some very interesting (and enlightening) conversations with many attendees and presenters. In this post I've summarized some of the key takeaways from the event as well as provided some of my own thoughts.

Note: There were several talks that were focused on a specific product or service. While I found these talks to be very interesting, I didn't include them in this post. I tried to cover the topics and lessons that can be applied to serverless in general.

Update November 16, 2018: Some videos have been posted, so I've provided the links to them.

Serverless isn't the future... because we can use it now! ⚡️

Kelsey Hightower gave the keynote and showed us how event-driven applications are akin to xinetd from the 1970s. We seem to find new ways to do the same thing, and serverless is no different. Like many speakers, he reinforced the idea that serverless lets us focus on "business logic" instead of infrastructure, but there are plenty of pros and cons. Below are some of his thoughts...

On serverless adoption...

Adoption is hurt by "over-promising" the capabilities of serverless. There's clearly an education gap when people think they can move their MongoDB to a Lambda function. As a serverless advocate, myself, I can see how things can be misinterpreted. We must do better.

On managed services...

Serverless relies heavily on managed services. But what do we do if a service goes away? Surely DynamoDB and Firebase won't disappear overnight, but API interfaces do change, and features are added and removed as cloud providers race to innovate. Maybe not the biggest concern, but something to think about.

On event-driven architectures...

What do we do when there is no event? We have to manufacture one. This was another interesting point that Kelsey made, although this is true for many traditional architectures as well. When services (such as for weather data) update information, they typically don't broadcast it to your application. This means that you are responsible for pinging their systems every so often to check for updates. If we move to an event-driven world, maybe there is an opportunity for robust pub/sub services.

On writing serverless functions...

Boiler plate doesn't go away. Functions become the main entry point, but you still need to include all your instrumentation, drivers, and dependencies. I probably have a minimum of ten things I need to add to every function before I even begin writing business logic. This can be partially solved by creating wrapper functions that incorporate most of your boilerplate code, but it does give you more complexity to manage.

On debugging...

Having distributed tracing is necessary. We need the ability to debug in these blackboxes. Monitoring serverless applications becomes more complex as we start adding additional managed services and more moving parts. It's possible for a single event to flow through several functions and services in order to complete the happy path. Without the ability to see where, why and when a part of this chain failed, debugging can become a Sherlock Holmes-worthy mystery.

On the advantage over microservices...

Scoped IAM roles per function gives you fine-grained control. Limiting the permissions of individual functions is certainly a major benefit, but the ability of functions to scale independently also ranks right up there. Traditional microservices are still mini-monoliths, which require scaling the entire service as well as broadly scoping permissions. Serverless lets us scale just the parts that need to and lock down permissions to minimize attack surface areas.

On enterprise adoption...

Focus on net new functionality that generates money. Enterprises have made massive investments in technology, and as a result, have years of legacy systems that they aren't just going to walk away from. Kelsey said that adopting serverless to add new services, or to expand services for new customers, adds value with minimal development and without touching the monolith. I think this is also a question of cloud adoption as many enterprises have yet to abandon their own on-prem solutions.

Unlimited still has limits, design for failure 💥

Jason Katzer, Director of Software Engineering at Capital One and owner of an amazing banana shirt, gave a talk (video here) on How to Fail Without Even Trying. His main point was that "failure" is the default nature of any system, therefore, you must design your system to break. This is the basic concept behind chaos engineering and becomes extremely important when building serverless architectures. Here are some of his thoughts.

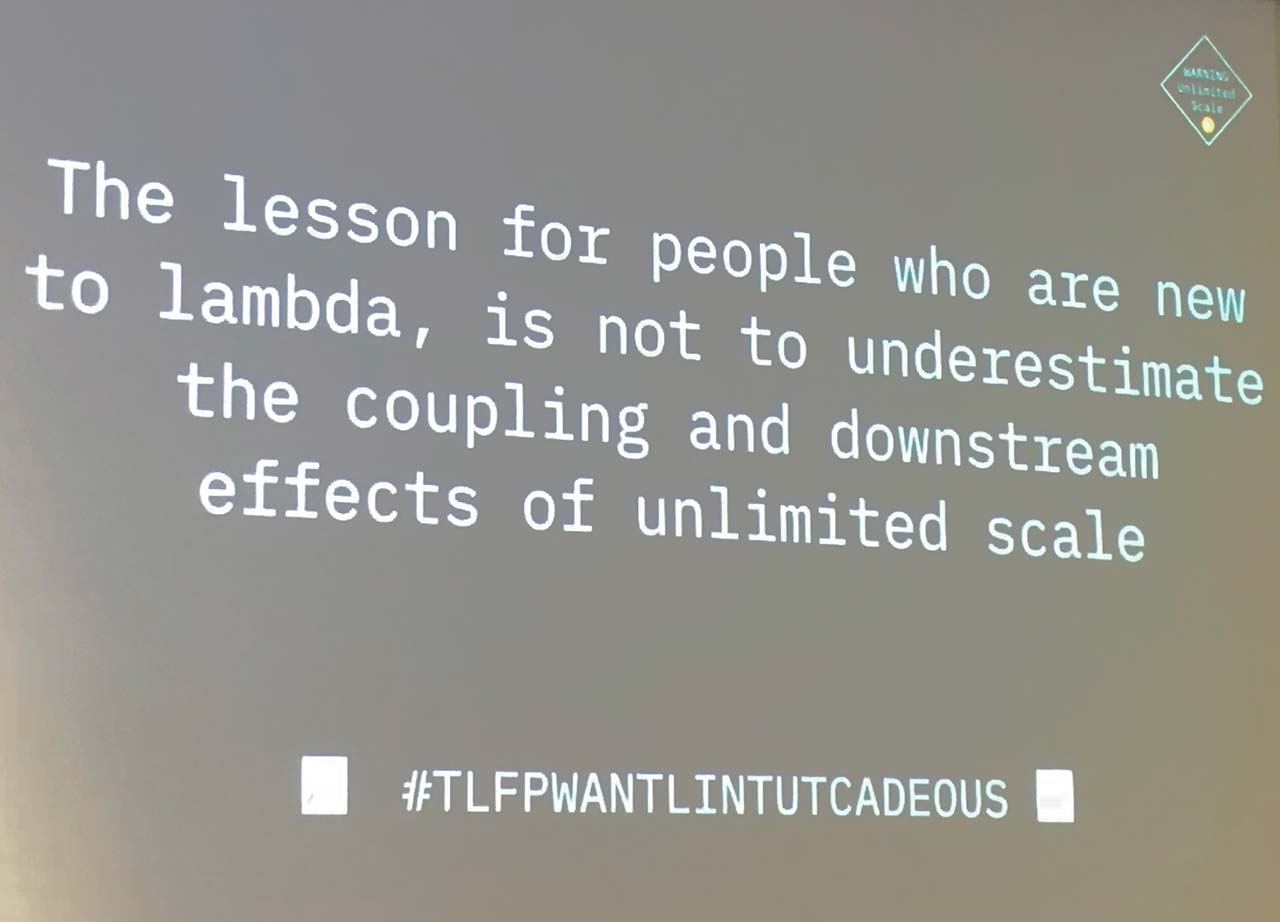

On unlimited scale...

"The lesson for people who are new to lambda, is not to underestimate the coupling and downstream effects of unlimited scale." The problem with the inherent scalability of serverless functions, is that most services they connect to are not as scalable. Whether you're connecting to a database, managed service, or third-party API, there will be a bottleneck somewhere. This needs to be planned for and properly managed.

On ways to fail...

Synchronous invocations, asynchronous invocations, dead letter queues, Kinesis streams, DynamoDB streams, and service limits everywhere! There are many, many, many ways in which your serverless application can fail. This is further complicated by the fact that "error handling for a given event source depends on how the Lambda is invoked." This is Jason's Rule #1 of Lambda Club. Sometimes retry logic is built in, sometimes not. Sometimes it retries 50 times, sometimes it retries 2. Sometimes it blocks further processing, sometimes it doesn't. Bottomline is that you need to read the docs and be prepared to handle the myriad of failure possibilities.

On service limits...

On an intentionally confusing slide, Jason points out that service limits are (not) always numeric, (not) always increasable, (not) increased automatically, and (not) increased instantly. There are a lot of service limits, particularly with AWS. There is even the case with ENI exhaustion that doesn't even generate logs in CloudWatch. So make sure your app can respond appropriately when you inevitably bump into one or more of these limits.

On IAM roles...

IAM is the new CHMOD. Astute point. For those of you familiar with Unix/Linux-style permissions, there is an interesting parallel between the two. Incorrect permissions are a common cause of applications failing to execute correctly and can also be a major security issue. IAM roles play a very similar function with Lambda and other FaaS offerings. This is also a major issue when testing your application locally versus in the cloud.

On testing your application...

"If you can't control how often something happens, fake it continuously in production." Jason makes the excellent point that we should be able to simulate events so that we can test our services as we are launch new versions. This means creating end-to-end tests that can be simulated in your production environment to make sure you catch issues before your users do. This is particularly helpful with canary deployments where you may only be replacing small parts of an overall flow.

The problem is data 📊

Gwen Shapira from Confluent (author of Kafka: The Definitive Guide) gave an excellent talk (video here) that focused on how we move data through serverless applications. Lots of interesting points, perhaps most importantly, that there are many possible ways to accomplish this.

On scalability...

The First Rule of Serverless: Don't Worry About Scalability. But, you really need to think about it. Gwen walked us through how we typically think a function will process an event and then how we think that processing will easily scale. The caveat is that the ability to scale depends on the source of the event along with the provider's scaling behavior. A common use case is to put Kinesis (or Kafka) in front of Lambda to benefit from sharding, instance reuse and batching, even if it introduces some latency.

On statelessness...

It is all fun and games as long as it is stateless. The problem is that state is often required for dynamic rules, data enrichment, and aggregation. Gwen pointed out that the typical way to handle this is to save and retrieve data from a cloud datastore (like Aurora Serverless or DynamoDB). Her advice was to "shape the data the way you want to query it." This is often a difficult concept for those familiar with relational database normalization, but avoiding the need to restructure data or perform JOINs (gasp!) will go a long way to making your applications more efficient.

On events...

FACT: Events are facts. Something happened! This is a concept that has long been embraced by analytics teams, and it makes a lot of sense for us to look at all data flowing through our systems this way. When a user places an order, or the order is processed or shipped, something significant happened to trigger those events. In our typical CRUD-based system, we would most likely update a master record in a database to capture these state changes. If we treat events as both a command and data, then we can maintain a history as well as mutate the state of our application.

On turning your database inside out...

This idea of capturing all these events first, and then processing them to mutate our application's state, leads us to the CQRS pattern (Command Query Responsibility Segregation). Using the CQRS pattern, we are separating our query model (that which might get accessed from a UI) and our command model (which would be how the system accepts and processes events).

There are multiple reasons why this is so powerful. For example, our events maintain their fidelity because we store them exactly as we receive them. They can also be multicast, allowing us to generate several query models for different purposes (web UIs, reporting engines, analytics, billing, etc.). Plus, they are replayable, meaning we can recreate our application's state simply by reprocessing our events. It is similar to Nordstrom's "distributed ledger" model and is worth looking into.

The Serverless Native Mindset 🤖

Ben Kehoe from iRobot gave a talk focused on how organizations should think about serverless.

On solving tech problems...

"You shouldn't have to solve your tech problems before you solve your business problems." Mic drop (or at least there should have been). This is a really important point. I've been on teams (and worked on side projects) that have taken months to figure out low-level technical problems that serverless (and many managed services) have completely abstracted away. If you added up the amount of time, energy and money development teams spend on reinventing the wheel, it would be staggering.

On using managed services...

You only know what the provider tells you. Trust is a journey. In order to focus on business value, we must outsource tech problems to the provider. This means you lose quite a bit of control. Ben points out some important things to consider with manage services, including the fact that you must accept the service for what it is today, because you can't make changes. And more importantly, you may not be able to remediate a provider's outage. The key here is that you already rely on many trusted providers to run your business, using managed services isn't much different.

On the term serverless...

"It's a terrible name. Nobody likes it, but we're stuck with it, so get over it." Ben suggests that the primary metric for the "serverlessness" of something is how managed it is, with the secondary metric being how closely does your bill match your usage. He also made a key distinction that both infrastructure servers (VMs) and applications servers (runtimes) must be managed to be serverless. He believes that containers or on-prem implementations are not serverless. If anything, this goes to show that the term is confusing at best, and may actually be a bit divisive.

On focusing on TCO...

"Your operations salaries should be in the same budget as your cloud bill." There is a common argument against serverless that purports that it is too expensive at scale. If a function is running all the time, it may be much cheaper to run a few VMs or containers to handle that steady load. Ben argues that we should be looking at the total cost to run our applications, including the operations team required to maintain the underlying infrastructure. There can be other costs too, so don't just look at the compute cost when making the comparison.

On going all-in with serverless...

The difference in operations between all serverless and mostly serverless is huge. Ben points out that going all-in with serverless isn't easy. He equates serverless applications to Rube Goldberg machines and admits that there is "always duct tape" involved, but that once you make it work, it is completely worth it. This is something I agree with whole-heartedly. Building a serverless application will test your ability to think through complex workflows and it may even take you a few tries to get it right. However, once you get through the learning curve on your first project, each successive one becomes exponentially faster to build.

Adopting Serverless with 80-Million Users 🌎

Tyler Love, the CTO of Bustle Digital Group, not only gave an excellent talk about how Bustle went fully serverless, but also may have won the best-dressed award. It was fascinating to hear him talk about Bustle's serverless infrastructure handling in upwards of a BILLION requests per month. He also showed us their fully serverless CMS and the dashboards they use to monitor their systems. He had some other insights as well...

On cold starts...

"Cold starts are not a huge issue for us." This is a common argument against serverless and it is generally hard to defend. Request-response applications can sometimes see 10 second latencies when cold starting a function. However, this is one of those things that scale tends to help with. The more your functions see traffic, the less likely these cold starts are. If they are not a problem for Bustle's 80-million users, then maybe we shouldn't worry about them so much. 🤷♂️

On JavaScript from top to bottom...

I'm a big fan of JavaScript, both for its frontend versatility and the power of Node.js on the backend. Bustle has adopted JavaScript for their entire stack, which gives their small team of developers the flexibility to work on both sides of the applications they maintain. They use a combination of React on the frontend along with server side rendering and edge caching to handle millions of requests per day. I think this is a great strategy for small teams. There are plenty of other languages that serverless functions support, but a homogeneous stack makes a lot of sense. And if Bustle is running it at scale, it deserves a closer look. 🔍

On log collection...

"It's hard to get anything interesting out of Elasticsearch." Bustle uses CloudWatch to collect logs and then uses a Lambda function to transform those logs and push them into Elasticsearch and Scalyr (although he did mention they were phasing out ES). This allows them to quickly search through aggregated logs. They also use Sentry for error tracking and aggregation. Key takeaway is that AWS's CloudWatch solution is not enough for applications at scale. This makes me wonder if other cloud provider's options are subpar as well.

On edge computing...

Bustle is experimenting with using CloudFlare workers to server side render React at the edge. This will make for a really interesting use case if they move it into production. AWS also has their Lambda@Edge service that is similar to CloudFlare workers. These services allow you to execute code at edge locations around the world, significantly reducing latency for your end users. I've heard a few horror stories about propagation times, but under the right circumstances, this could be a very powerful service.

Managing the gaps in the Serverless Development Lifecycle ⏰

Chase Douglas, the CTO of Stackery, gave his Is Serverless the New Swiss Cheese? talk. I've actually watched a video of him giving a similar version of this talk before, but there are several observations and ideas that are worth pointing out. The talk identifies four major gaps in the serverless development lifecycle that need to be addressed. He also gives us a few tips on how we can address them.

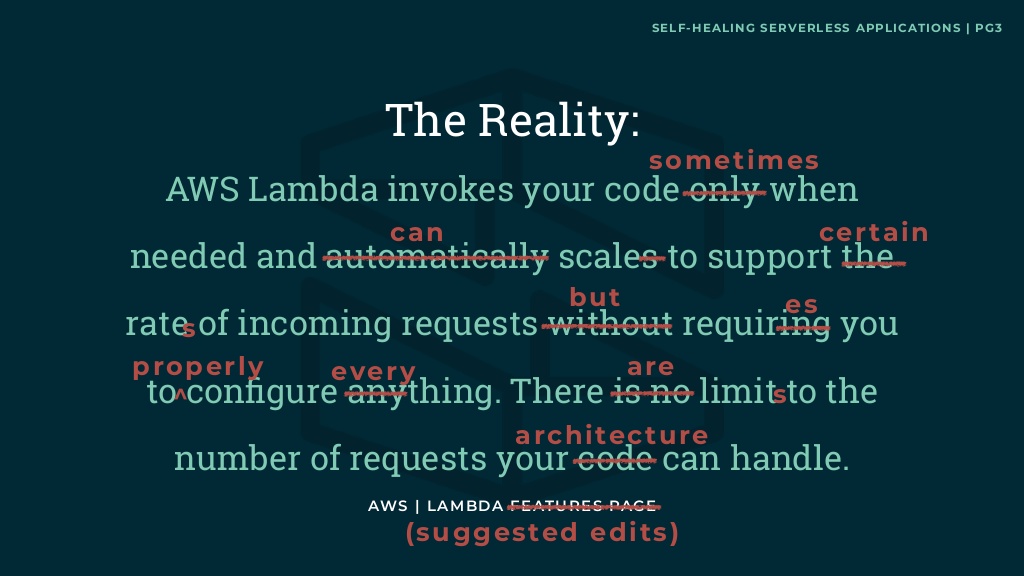

On the reality of AWS Lambda...

Chase made some edits to the AWS Lambda features page:

While this is a bit tongue-in-cheek, the reality is not quite as rosy as AWS makes it out to be. The key takeaway here is just to know that everything has limits. As Jason Katzer pointed out, service limits are inevitable and you must build your applications to handle failure.

On managing infrastructure as code...

Chase's main point here was about permission scoping and how difficult that can be to manage. There are hundreds (if not thousands) of fine-grained service permissions that can be granted to an IAM role. Many developers just add a "*" permission and call it a day, but that adds significant security risks. He also gave some useful tips on using CloudFormation conditions to help manage dependent resources. Other than using a framework to partially help you with this, I still think this is a major issue.

On collaboration mechanisms...

Serverless is cheap enough for every developer to have their own application instances. This is a great way to let your developers test their applications using live cloud environments. Chase simply stressed good naming conventions, but I think most development frameworks help you with that. Key takeaway here is that local development and testing can be difficult with serverless, so giving developers access to their own cloud sandbox is helpful and inexpensive.

On testing...

Serverless does not change testing, but it changes how you run tests. Unit testing in serverless should be the same as any other unit testing, but how do we run system and integration tests when cloud resources and managed services are involved? Chase explains that there are two schools of thought, mock and run locally, or run in the cloud. His advice is to run locally if it just API endpoints, but otherwise, deploy it and test in the cloud. I tend to agree. I like to mock some services to run my unit tests, but simulating events in the cloud, as Jason Katzer suggests, gives you a much better guarantee. Final thoughts from Chase on this, "with the right approach, serverless is just as testable as other architectures."

On monitoring and instrumentation...

Pick monitoring tools, ask everyone to instrument, and then pray. Short of implementing draconian measures that are sure to frustrate your development team, Chase suggests a better way to enforce instrumentation within your application. He uses some simple naming conventions and a framework to invoke an instrumented wrapper function that loads your service handler. As Kelsey pointed out earlier, there is a lot of boilerplate when creating serverless functions. This is a handy way to add some of that, as well as enforce other instrumentation rules.

The case for open source serverless 👨🏻💻👩💻

Dave Grove from IBM, David Delabasse from Oracle, and Yaron Haviv from Iguazio participated in a panel on Open Source Serverless. I found this conversation to be interesting, but also a bit frustrating. It was a hard to hear at times, but I'm pretty sure David from Oracle said that "web apps [in serverless] are an anti-pattern." I don't agree at all. I think it is one of the better use cases.

A big focus of this discussion was around standardization. They discussed the CNFC project to standardize events, and Dave Grove suggested that AWS is limiting themselves by not standardizing compute on Kubernetes. He also brought up some issues with OpenWhisk in that it can't guarantee an exactly-once execution model. Instead, they follow a "best try execution model." This isn't a good look for open source serverless in my opinion. Key takeaway here is that serverless implementations across providers are only the same in theory, but vary widely in their implementation. Something to keep in mind when choosing a provider.

The moderator, Asaf Somekh, asked the panel what their recommendations would be for organizations looking to start with serverless. Here were some of their suggestions, which seem to echo many of the suggestions from other speakers:

- Ask, why serverless?

- It's just another tool

- First have a use case in mind

- Don't rewrite the code you already have (use as glue)

- Don't take existing architecture and just rebuild it in serverless

- Rethink those parts of your app that could benefit from serverless

Wrapping up with a fireside chat 🔥

Kelsey Hightower came back to chat with Igauzio's CEO, Asaf Somekh. You can watch the video of this to see it in its entirety. There were a few things Kelsey said that got me thinking though, especially about barriers to serverless adoption. I jotted some of these down (not exact quotations) and I think they are worth sharing. I'm not going to comment on these, but instead, just put them out there. I'd like to hear your thoughts on these in the comments.

- If you put credit card data into an event, then all other functions can see that data.

- Under-utilization is not a problem. Who cares if it is 5%? Spin up and spin down saves you what, like 20 bucks? It's not a major concern.

- Containers are tainted because people think we need to install all this stuff. But serverless uses containers under the hood, it's just a different interface into it.

- Using FaaS with no events doesn't make sense.

- I will pay someone to watch my VM so I don't have to pay out $1M to a customer for downtime (as opposed to ceding control to a cloud provider's managed service).

Final Thoughts 🤔

I also left this conference a bit concerned about the future of serverless. Don't get me wrong, I am still an ardent supporter of serverless, but I think adoption is going to take a lot longer than I had originally believed. Several people mentioned the "serverless bubble" to me (though perhaps "serverless echo chamber" is a better way to describe it), and the more I look around, the more obvious that reality is. The vast majority of developers don't know what it is, and even those that do have yet to see (or embrace) the benefits. After listening to Kelsey Hightower speak, I can see how some of the benefits won't resonate with many organizations.

As serverless advocates, we need to do better. I'm not 100% sure what we need to do exactly, but there are several of us thinking about this. If you have thoughts on this, please share them with me.